Robots.txt Generator

About the Robots.txt Generator Tool

Search engines use bots, also called spiders or crawlers, that crawl web pages to analyze their content. By assessing how relevant that content is, they help search engines show pages on search engine results pages (SERPs) to the best-suited audience.

The Robots Exclusion Protocol is the mechanism a website uses to communicate with bots. It is primarily used to allow or prevent web robots from crawling specific pages. Though it is often overlooked, it can improve SEO with little effort.

A robots.txt file can be created with an online robots.txt generator. Many websites have one, but few developers know how to make the most of it.

What is robots.txt?

A robots.txt file, defined by the Robots Exclusion Standard, is a plain-text file that tells web robots which pages they may or may not crawl. It is placed in the root directory of the site, for example https://webmasterfly.com/robots.txt.

The file contains directives that tell robots which pages they may or may not visit. These directives follow a standard format, and a robots.txt generator can produce a correctly formatted file for you.
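
As a rough illustration of that format, the Python sketch below assembles a robots.txt file from a few rules. The function name `build_robots_txt`, the `/admin/` and `/tmp/` paths, and the sitemap URL are hypothetical examples chosen for illustration; they are not taken from any real generator.

```python
def build_robots_txt(groups, sitemap=None):
    """Build robots.txt text from (user_agent, disallow_paths, allow_paths) tuples.

    Hypothetical helper for illustration only.
    """
    lines = []
    for user_agent, disallow, allow in groups:
        lines.append(f"User-agent: {user_agent}")
        for path in allow:
            lines.append(f"Allow: {path}")
        for path in disallow:
            lines.append(f"Disallow: {path}")
        lines.append("")  # a blank line separates one group from the next
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")
    # Normalise to exactly one trailing newline.
    return "\n".join(lines).rstrip("\n") + "\n"


if __name__ == "__main__":
    rules = [("*", ["/admin/", "/tmp/"], [])]
    print(build_robots_txt(rules, sitemap="https://example.com/sitemap.xml"), end="")
```

Each group starts with a `User-agent` line naming the bots it applies to (`*` means all bots), followed by its `Allow` and `Disallow` rules; the optional `Sitemap` line points crawlers to the site's XML sitemap.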

Not every bot obeys the instructions in a robots.txt file. Bots that do comply crawl or skip pages exactly as the file directs. If a website has no robots.txt file, robots are free to crawl every page.
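
To see how a compliant bot reads these instructions, the sketch below uses Python's standard `urllib.robotparser` module to check URLs against a sample robots.txt. The bot name `ExampleBot`, the example.com URLs, the `/private/` rule, and the `can_crawl` helper are illustrative assumptions. An empty file is also parsed to show that, with no rules, every page may be crawled.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a bot that honours robots.txt may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


sample = """\
User-agent: *
Disallow: /private/
"""

print(can_crawl(sample, "ExampleBot", "https://example.com/private/report.html"))  # False
print(can_crawl(sample, "ExampleBot", "https://example.com/blog/post.html"))       # True
# No rules at all: everything is crawlable.
print(can_crawl("", "ExampleBot", "https://example.com/private/report.html"))      # True
```

Keep in mind that this only models well-behaved bots: nothing in robots.txt physically stops a crawler that chooses to ignore it.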

Importance of robots.txt

The importance of robots.txt files is often overlooked. Not only do they tell unwanted web robots to stay away from certain pages, they can also improve SEO. A correctly generated file, whether from a WordPress robots.txt generator or any other tool, is the right way to go about it.

The goal is to keep web robots away from pages that do not need to be crawled. The information these bots gather helps search engines determine a website's relevance and ranking, but Google advises site owners to block crawling of unimportant pages so that crawl budget is not wasted.

Crawl budget is the limit on the number of pages a bot will crawl on a site. For effective results, you want bots to spend that budget on your valuable pages. As SEO veteran Neil Patel puts it, robots.txt files are "SEO juice."
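
To illustrate keeping bots on valuable pages, the sketch below disallows two kinds of low-value URLs, internal search results and printer-friendly duplicates, then filters a list of URLs down to those a compliant bot would still crawl. The `/search` and `/print/` paths, the URL list, the bot name `ExampleBot`, and the `crawlable` helper are hypothetical; it relies on Python's standard `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block internal search results and printer-friendly copies.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /print/
"""


def crawlable(urls, robots_txt=ROBOTS_TXT, user_agent="ExampleBot"):
    """Return the URLs a robots.txt-compliant bot is allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if parser.can_fetch(user_agent, u)]


urls = [
    "https://example.com/products/blue-widget",
    "https://example.com/search?q=widget",
    "https://example.com/print/products/blue-widget",
    "https://example.com/blog/widget-guide",
]
print(crawlable(urls))
```

Rules match by path prefix, so `Disallow: /search` also blocks a page such as `/search-tips`. Adding a trailing slash or a more specific path narrows a rule when that is not intended.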

How to use robots.txt?

Using robots.txt files properly is a little technical. They must follow a standard format, and even a small mistake can cause crawlers to misread or ignore a rule. Any good robots.txt generator will get the format right for you.
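
Because small formatting mistakes matter, a quick check before uploading the file can catch them. The sketch below is a hypothetical, deliberately simple checker (`lint_robots_txt` is not a real library function). It flags lines without a `field: value` shape, unrecognised field names, and `Allow`/`Disallow` rules that appear before any `User-agent` line. It assumes `user-agent`, `allow`, `disallow` and `sitemap` as known fields, plus the widely seen but non-standard `crawl-delay`; real crawlers may accept or ignore other fields.

```python
# Assumed set of recognised fields; real crawlers may support more or fewer.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots_txt(text):
    """Return a list of (line_number, message) problems found in robots.txt text."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' between field and value"))
            continue
        field = line.split(":", 1)[0].strip().lower()  # field names are case-insensitive
        if field not in KNOWN_FIELDS:
            problems.append((number, f"unknown field '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow") and not seen_user_agent:
            problems.append((number, f"'{field}' rule before any User-agent line"))
    return problems


sample = """\
User-agent: *
Disalow: /tmp/
Disallow /admin/
"""
for number, message in lint_robots_txt(sample):
    print(f"line {number}: {message}")
```

Here the misspelled `Disalow` and the missing colon after `Disallow` are both reported; a crawler would typically just skip such lines, so the intended rules would silently not apply.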

Also, robots.txt is case-sensitive: the file must be named robots.txt in lowercase, and the paths in its rules are matched case-sensitively. For crawlers to find it, the file must be placed in the root directory of the site. Use it to block robots from pages that are duplicate or unimportant, but never block pages that you want search engines to show. Lastly, do not forget to optimize your robots.txt file.
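
The location and case-sensitivity points can be checked with Python's standard library. In this sketch, `robots_txt_url` is a hypothetical helper that derives the root robots.txt URL for any page, and `urllib.robotparser` shows that a rule for `/Private/` does not apply to `/private/`. The example.com URLs, the paths, and the bot name `ExampleBot` are placeholders.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def robots_txt_url(page_url):
    """Return the location crawlers check for robots.txt: the root of the host."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/blog/post.html"))  # https://example.com/robots.txt

# Paths in rules are case-sensitive: /Private/ does not cover /private/.
parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /Private/"])
print(parser.can_fetch("ExampleBot", "https://example.com/Private/a.html"))  # False
print(parser.can_fetch("ExampleBot", "https://example.com/private/a.html"))  # True
```

A file uploaded to a subdirectory such as `/blog/robots.txt` would simply never be requested, so its rules would have no effect.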

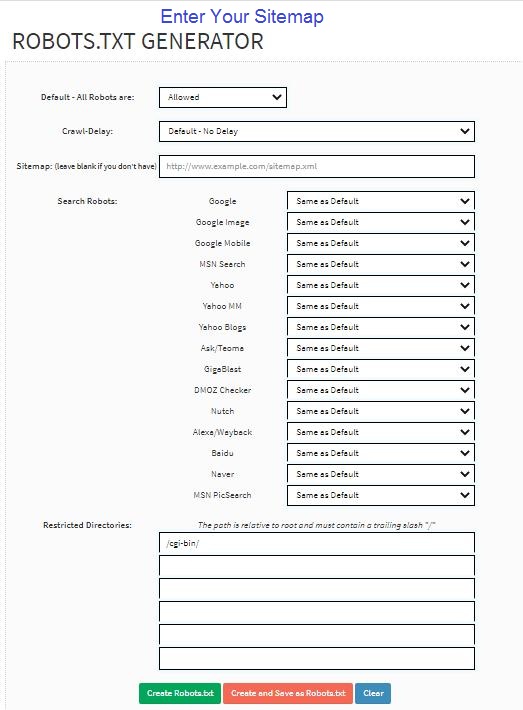

How to easily generate robots.txt

If writing a robots.txt file by hand in a plain text editor feels too technical, there is an easier way. You can use any free robots.txt generator, such as the one provided by WebmasterFly, to create robots.txt files without hassle.
